# Neural Networks

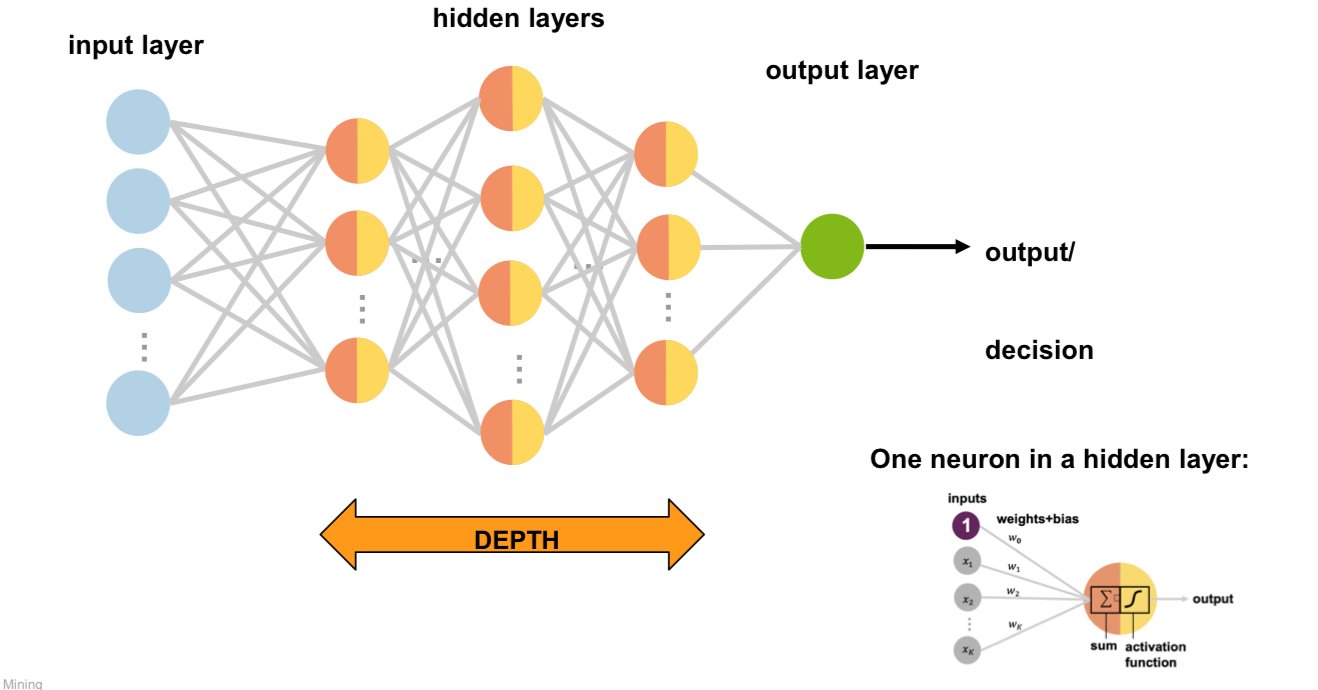

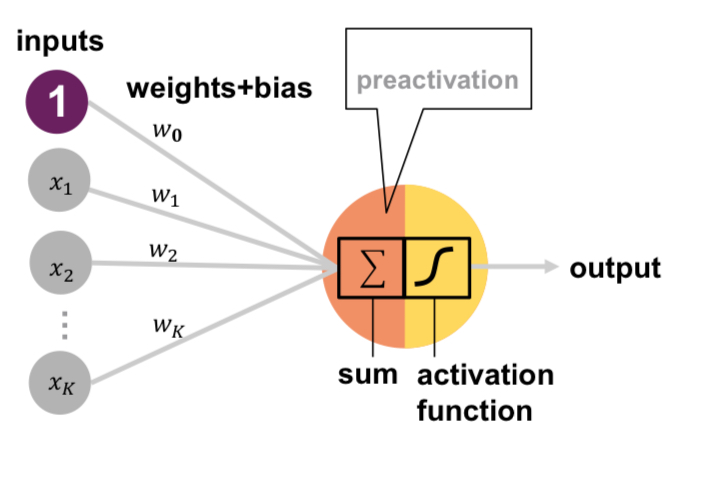

## Neuron

A neuron applies an activation function to the weighted sum of its inputs plus a bias term.

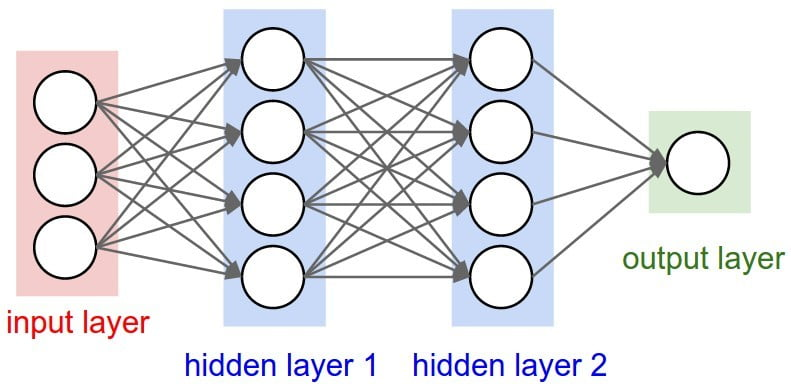
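As a minimal sketch of the definition above, the snippet below computes one neuron's output in plain Python. The function names `neuron` and `sigmoid`, and the example inputs, weights, and bias, are illustrative assumptions rather than anything taken from the notes.

```python
import math


def sigmoid(z):
    # Logistic activation: squashes any real number into (0, 1).
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias, activation=sigmoid):
    # Weighted sum of the inputs plus the bias term...
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    # ...passed through the activation function.
    return activation(z)


# Hypothetical values: 0.5*1.0 + (-0.25)*2.0 + 0.0 = 0.0, and sigmoid(0) = 0.5.
output = neuron([1.0, 2.0], [0.5, -0.25], 0.0)
print(output)  # 0.5
```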

## Deep Neural Networks

Deep neural networks are neural networks with more than one hidden layer.

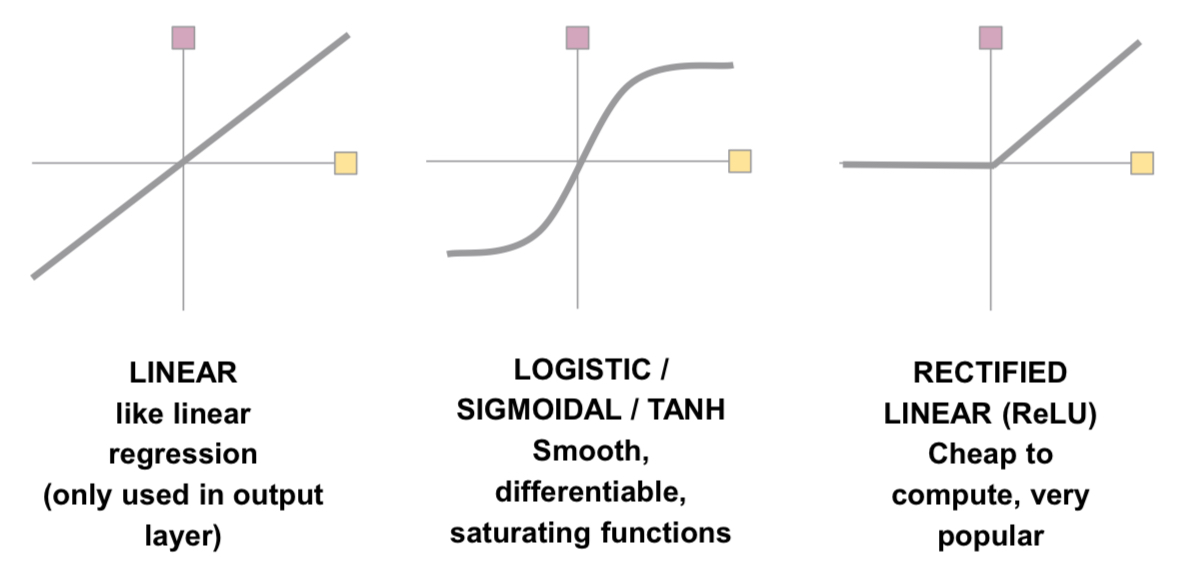
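To make "more than one hidden layer" concrete, here is a small sketch of a forward pass through two hidden layers followed by an output layer. The helper names (`dense`, `forward`, `identity`), the layer sizes, and all weights are made-up assumptions chosen so the arithmetic is easy to follow by hand.

```python
def relu(z):
    # Rectified Linear Unit: passes positive values, zeroes out negatives.
    return max(0.0, z)


def identity(z):
    # No activation; used here for the output layer.
    return z


def dense(inputs, weights, biases, activation):
    # One row of weights (and one bias) per neuron in the layer.
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(x, layers):
    # Feed the output of each layer into the next one.
    for weights, biases, activation in layers:
        x = dense(x, weights, biases, activation)
    return x


# Hypothetical network: 2 inputs -> 2 hidden -> 2 hidden -> 1 output.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0], relu),  # hidden layer 1
    ([[1.0, 1.0], [-1.0, 1.0]], [0.0, 0.0], relu),  # hidden layer 2
    ([[1.0, 2.0]], [0.5], identity),                # output layer
]

y = forward([2.0, 1.0], layers)
print(y)  # [4.0]
```

Tracing it by hand: the first hidden layer gives `[1.0, 1.5]`, the second gives `[2.5, 0.5]`, and the output is `2.5 + 2 * 0.5 + 0.5 = 4.0`.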

## Activation Functions

An activation function determines the output of each neuron and introduces non-linearity into the model. Common choices include ReLU (Rectified Linear Unit) and Sigmoid. Without a non-linear activation, stacked layers would collapse into a single linear transformation; with one, the network can learn complex patterns and relationships in the data during training.

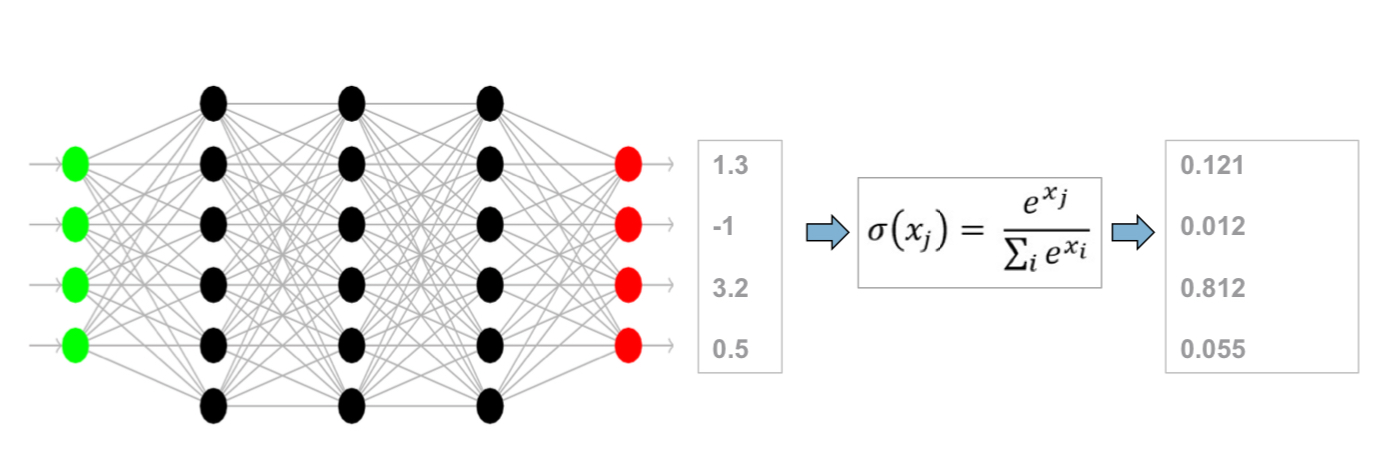
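The two activation functions named above can be sketched in a few lines. This is an illustration using the standard definitions, with sample inputs chosen arbitrarily.

```python
import math


def relu(z):
    # ReLU: max(0, z). Linear for positive inputs, zero otherwise.
    return max(0.0, z)


def sigmoid(z):
    # Sigmoid: 1 / (1 + e^(-z)). Output always lies in (0, 1).
    return 1.0 / (1.0 + math.exp(-z))


# Arbitrary sample inputs to compare the two functions.
samples = [-2.0, 0.0, 2.0]
relu_values = [relu(z) for z in samples]
sigmoid_values = [round(sigmoid(z), 4) for z in samples]

print(relu_values)     # [0.0, 0.0, 2.0]
print(sigmoid_values)  # [0.1192, 0.5, 0.8808]
```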

## Softmax

Goal: For classification tasks with mutually exclusive classes, the output layer should ideally produce 1.0 for the correct class and 0 for all other classes. Softmax approximates this by turning the raw output scores into probabilities that sum to 1.

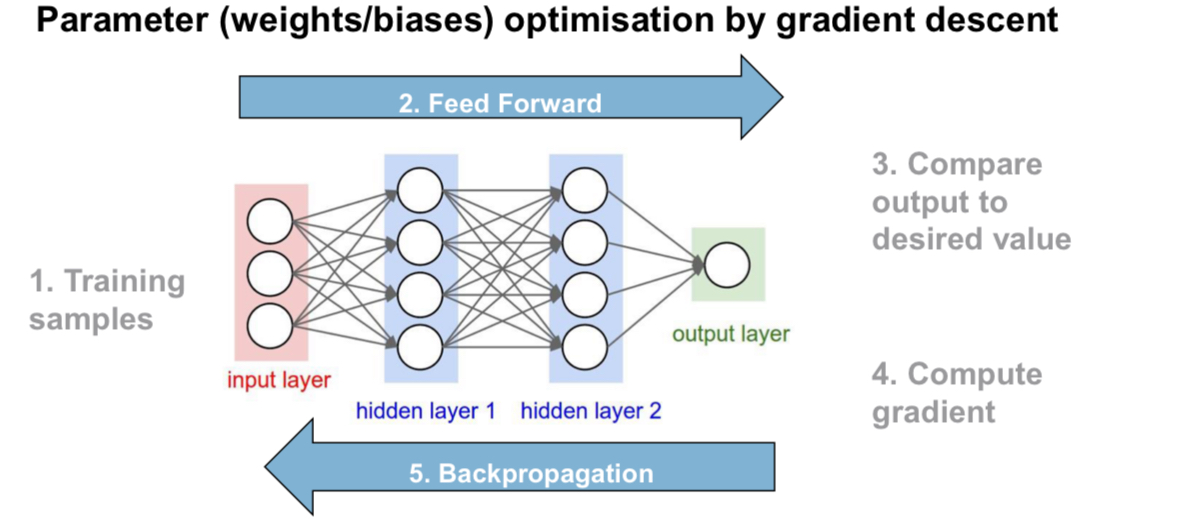
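A minimal sketch of softmax, assuming the standard definition (exponentiate each score, then divide by the sum of the exponentials). Subtracting the maximum score first is a common numerical-stability trick that does not change the result; the example scores are made up.

```python
import math


def softmax(logits):
    # Shift by the max so exp() never overflows; the output is unchanged.
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    # Normalize so the outputs are positive and sum to 1.
    return [e / total for e in exps]


# Hypothetical raw scores for three mutually exclusive classes.
probs = softmax([2.0, 1.0, 0.1])
print([round(p, 3) for p in probs])  # [0.659, 0.242, 0.099]
```

The largest score receives the largest probability, but the output only approaches the ideal 1.0 / 0 pattern as the gap between the scores grows.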

## Training in Feedforward Neural Networks